Meta’s Quest SDK Update: Thumb Microgestures and Enhanced Audio to Expression

Table of Contents

- 1. Meta’s Quest SDK Update: Thumb Microgestures and Enhanced Audio to Expression

- 2. Thumb Microgestures: A New Era of Hand Tracking

- 3. Improved Audio To Expression: Enhanced Avatar Realism

- 4. How do the new thumb microgestures in Meta’s Quest SDK update improve accessibility in VR experiences?

- 5. A Leap Forward in VR: Interview with Kai Arram, Lead VR Interaction Designer

- 6. Thumb Microgestures: A Game Changer for VR Interaction?

- 7. Enhanced Audio to Expression: Avatars Get Real

- 8. The Future of VR Development

Meta’s latest SDK update for Quest headsets introduces innovative features, including thumb microgestures for more intuitive control and improved Audio To Expression for more realistic avatar interactions.

Thumb Microgestures: A New Era of Hand Tracking

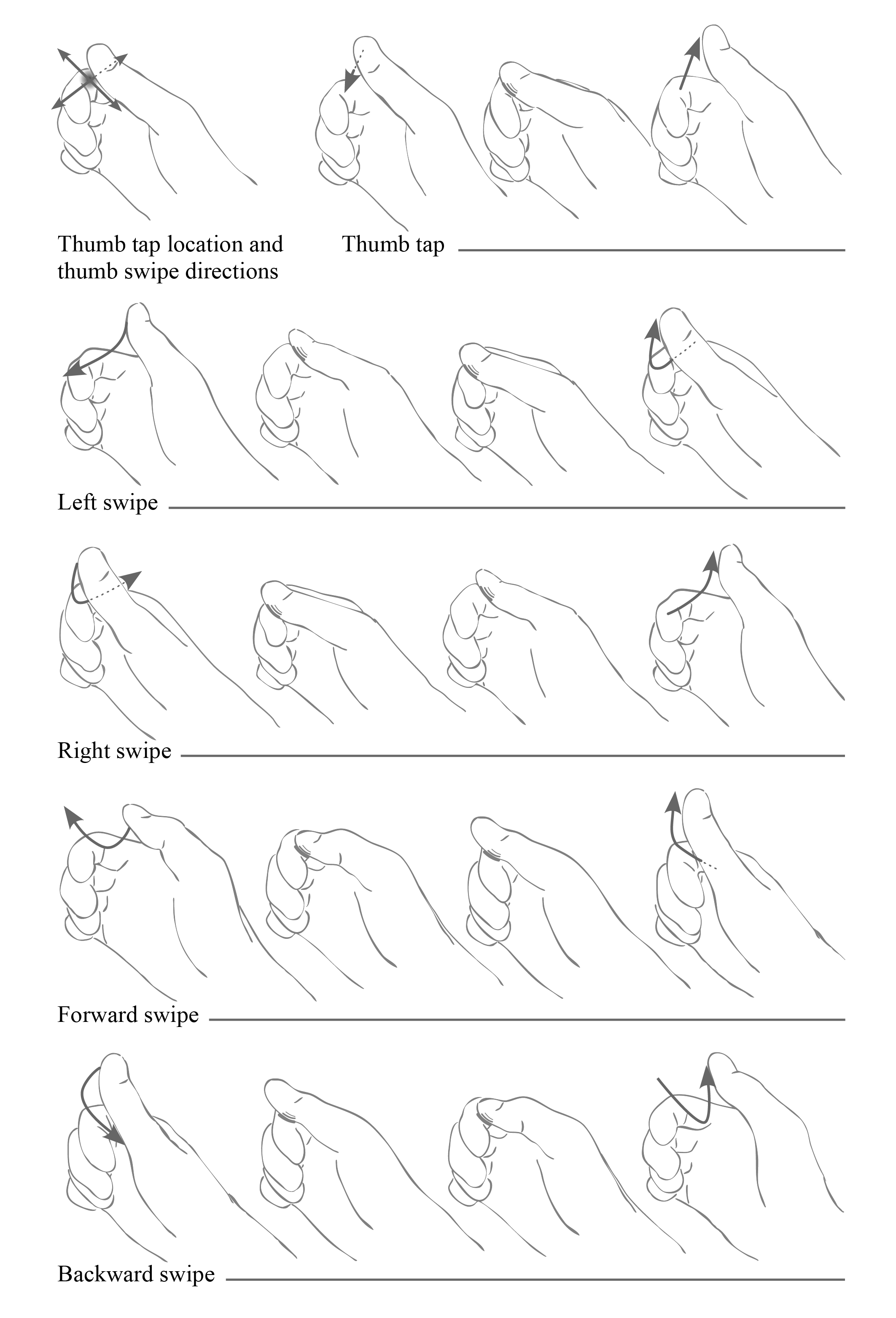

The update brings microgestures to Quest, leveraging the headset’s controller-free hand tracking capabilities. These microgestures allow users to tap and swipe their thumb on their index finger, mimicking a D-pad. This functionality is available through the Meta XR Core SDK for Unity and the OpenXR extension XR_META_hand_tracking_microgestures for other engines.
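
To make the D-pad analogy concrete, here is a minimal Python sketch that maps recognized thumb microgestures onto D-pad style inputs. The `Microgesture` enum and its member names are hypothetical stand-ins; the identifiers actually exposed by the Meta XR Core SDK or by `XR_META_hand_tracking_microgestures` may differ, so treat this as an illustration of the idea rather than the SDK's API.

```python
from enum import Enum, auto
from typing import Optional


class Microgesture(Enum):
    """Hypothetical gesture identifiers; NOT the SDK's actual names."""
    NONE = auto()
    SWIPE_LEFT = auto()
    SWIPE_RIGHT = auto()
    SWIPE_FORWARD = auto()
    SWIPE_BACKWARD = auto()
    THUMB_TAP = auto()


class DPad(Enum):
    LEFT = "left"
    RIGHT = "right"
    UP = "up"
    DOWN = "down"
    CENTER = "center"  # the "press" in the middle of a D-pad


# Assumed mapping: a forward swipe reads as "up", a backward swipe as
# "down", and a thumb tap as the center button.
_GESTURE_TO_DPAD = {
    Microgesture.SWIPE_LEFT: DPad.LEFT,
    Microgesture.SWIPE_RIGHT: DPad.RIGHT,
    Microgesture.SWIPE_FORWARD: DPad.UP,
    Microgesture.SWIPE_BACKWARD: DPad.DOWN,
    Microgesture.THUMB_TAP: DPad.CENTER,
}


def to_dpad(gesture: Microgesture) -> Optional[DPad]:
    """Translate one recognized microgesture into a D-pad input, or None."""
    return _GESTURE_TO_DPAD.get(gesture)


if __name__ == "__main__":
    for g in (Microgesture.SWIPE_LEFT, Microgesture.THUMB_TAP, Microgesture.NONE):
        print(g.name, "->", to_dpad(g))
```

Keeping the translation in a single lookup table means an app can remap gestures (for example, for left-handed users) without touching the code that consumes D-pad input.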

Meta suggests these gestures could enable actions like teleportation and snap turning without traditional controllers, though “how microgestures are actually used is up to developers.” This opens up possibilities for developers to create more intuitive and immersive experiences.
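
Teleportation with snap turning can be expressed as a small state machine. The Python sketch below is one possible design, not Meta's: the gesture names are hypothetical, and both the 45-degree snap angle and the "swipe forward to aim, tap to confirm, swipe back to cancel" flow are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional, Tuple


class Microgesture(Enum):
    """Hypothetical gesture identifiers; NOT the SDK's actual names."""
    SWIPE_LEFT = auto()
    SWIPE_RIGHT = auto()
    SWIPE_FORWARD = auto()
    SWIPE_BACKWARD = auto()
    THUMB_TAP = auto()


SNAP_TURN_DEGREES = 45.0  # assumed comfort setting; apps choose their own


@dataclass
class Locomotion:
    yaw: float = 0.0                            # heading in degrees, [0, 360)
    position: Tuple[float, float] = (0.0, 0.0)  # floor-plane coordinates
    aiming: bool = False                        # True while a teleport arc is shown

    def handle(self, gesture: Microgesture,
               aim_target: Optional[Tuple[float, float]] = None) -> None:
        """Apply one microgesture; aim_target is where the teleport arc lands."""
        if gesture is Microgesture.SWIPE_LEFT:
            self.yaw = (self.yaw - SNAP_TURN_DEGREES) % 360.0
        elif gesture is Microgesture.SWIPE_RIGHT:
            self.yaw = (self.yaw + SNAP_TURN_DEGREES) % 360.0
        elif gesture is Microgesture.SWIPE_FORWARD:
            self.aiming = True                  # start showing the teleport arc
        elif gesture is Microgesture.SWIPE_BACKWARD:
            self.aiming = False                 # cancel without moving
        elif gesture is Microgesture.THUMB_TAP and self.aiming:
            if aim_target is not None:
                self.position = aim_target      # confirm the teleport
            self.aiming = False
        # A tap while not aiming is deliberately ignored.


if __name__ == "__main__":
    loco = Locomotion()
    loco.handle(Microgesture.SWIPE_LEFT)        # yaw becomes 315.0
    loco.handle(Microgesture.SWIPE_FORWARD)     # begin aiming
    loco.handle(Microgesture.THUMB_TAP, aim_target=(2.0, 3.5))
    print(loco)
```

Requiring an explicit "aim" step before a tap can move the player is one way to guard against accidental teleports from stray thumb taps.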

Beyond gaming, microgestures offer alternative methods of UI navigation. “Developers could, for example, use them as a less straining way to navigate an interface, without the need to reach out or point at elements.” Because users never have to reach out or point, this approach could be particularly useful in productivity and accessibility applications.
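
As a sketch of navigating an interface without reaching or pointing, the Python example below moves a focus highlight over a grid menu with swipes and activates the focused item with a tap. The gesture names, the `GridMenu` class, and the choice of "forward = up a row" are all hypothetical assumptions for illustration.

```python
from enum import Enum, auto
from typing import List, Optional


class Microgesture(Enum):
    """Hypothetical gesture identifiers; NOT the SDK's actual names."""
    SWIPE_LEFT = auto()
    SWIPE_RIGHT = auto()
    SWIPE_FORWARD = auto()
    SWIPE_BACKWARD = auto()
    THUMB_TAP = auto()


class GridMenu:
    """Moves a focus highlight over a grid of labels; no pointing required."""

    def __init__(self, items: List[List[str]]) -> None:
        if not items or not all(items):
            raise ValueError("menu needs at least one row and no empty rows")
        self.items = items
        self.row = 0
        self.col = 0

    def focused(self) -> str:
        return self.items[self.row][self.col]

    def handle(self, gesture: Microgesture) -> Optional[str]:
        """Move focus on a swipe; return the activated label on a tap."""
        if gesture is Microgesture.THUMB_TAP:
            return self.focused()
        # Assumed: forward moves up a row, backward moves down a row.
        d_row, d_col = {
            Microgesture.SWIPE_LEFT: (0, -1),
            Microgesture.SWIPE_RIGHT: (0, 1),
            Microgesture.SWIPE_FORWARD: (-1, 0),
            Microgesture.SWIPE_BACKWARD: (1, 0),
        }[gesture]
        # Clamp at the edges instead of wrapping around.
        self.row = min(max(self.row + d_row, 0), len(self.items) - 1)
        # Rows may differ in length, so clamp the column to the new row.
        self.col = min(max(self.col + d_col, 0), len(self.items[self.row]) - 1)
        return None


if __name__ == "__main__":
    menu = GridMenu([["Play", "Settings"], ["Help", "Quit"]])
    menu.handle(Microgesture.SWIPE_RIGHT)       # focus "Settings"
    menu.handle(Microgesture.SWIPE_BACKWARD)    # focus "Quit"
    print(menu.handle(Microgesture.THUMB_TAP))  # activates "Quit"
```

Clamping rather than wrapping is a design choice: it gives users a predictable "wall" at the menu edges, which can matter when there is no cursor to look at.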

Interestingly, Meta is also exploring this thumb microgesture approach for its sEMG neural wristband, intended as an input device for future AR glasses. This suggests that Quest headsets could serve as a development platform for these upcoming technologies, enabling developers to experiment with microgestures in VR before they become commonplace in AR.

Improved Audio To Expression: Enhanced Avatar Realism

The SDK update also features an enhanced Audio To Expression model. This on-device AI model generates realistic facial muscle movements from microphone audio input, creating estimated facial expressions without dedicated face-tracking hardware.
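
Meta does not describe the model's internals here, and a learned model is far beyond a short example. Purely to illustrate the shape of audio-driven facial animation (audio frames in, per-frame expression weights out), the Python sketch below drives a single hypothetical "jaw open" weight from loudness. This is a crude amplitude heuristic, not how Audio To Expression works, and the `full_open_rms` and `smoothing` values are arbitrary assumptions.

```python
import math
from typing import Iterable, List, Sequence


def frame_rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of one audio frame (samples in [-1, 1])."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def jaw_open_weights(frames: Iterable[Sequence[float]],
                     full_open_rms: float = 0.3,
                     smoothing: float = 0.5) -> List[float]:
    """Map each audio frame to a 0..1 'jaw open' weight.

    Toy heuristic, NOT Meta's model: louder frames open the mouth wider,
    saturating at full_open_rms. Exponential smoothing keeps the jaw from
    jittering between frames. Both parameters are illustrative guesses.
    """
    weights: List[float] = []
    current = 0.0
    for frame in frames:
        target = min(frame_rms(frame) / full_open_rms, 1.0)
        current = smoothing * current + (1.0 - smoothing) * target
        weights.append(current)
    return weights


if __name__ == "__main__":
    silence = [0.0] * 160
    loud = [0.6, -0.6] * 80
    print(jaw_open_weights([silence, loud, silence]))  # [0.0, 0.5, 0.25]
```

A heuristic like this can only open and close a mouth; the point of a learned model such as the one the article describes is to estimate richer expressions, including emotion and non-speech sounds, that loudness alone cannot capture.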

Audio To Expression replaced the older Oculus Lipsync SDK. Meta claims Audio To Expression actually uses less CPU than Oculus Lipsync did. The new v74 of the Meta XR Core SDK brings an upgraded model that “improves all aspects including emotional expressivity, mouth movement, and the accuracy of Non-speech Vocalizations compared to earlier models.”

Notably, Meta’s current Avatars SDK does not yet use Audio To Expression or inside-out body tracking. Integrating these technologies could significantly enhance the realism and expressiveness of avatars.

The latest Meta Quest SDK update marks a step forward in VR interaction and avatar realism. With thumb microgestures and improved Audio To Expression, developers have new tools to create more intuitive, immersive, and engaging experiences. Explore how these features can elevate your VR applications and user experiences.

How do the new thumb microgestures in Meta’s Quest SDK update improve accessibility in VR experiences?

A Leap Forward in VR: Interview with Kai Arram, Lead VR Interaction Designer

Archyde News had the opportunity to sit down with Kai Arram, Lead VR Interaction Designer at ImmersiaTech, to discuss the exciting new features in Meta’s latest Quest SDK update. Kai, welcome!

Thumb Microgestures: A Game Changer for VR Interaction?

Archyde: Kai, the new Quest SDK boasts thumb microgestures for hand tracking. What’s your take on this? Is it truly a notable advancement?

Kai Arram: Absolutely! Thumb microgestures have the potential to revolutionize VR interaction. Think about it – no more fumbling for controllers when you want to teleport or navigate a menu. The ability to tap and swipe on your index finger, mimicking a D-pad, offers a far more intuitive and seamless experience. We’re already brainstorming ways to integrate them into our next VR experience.

Archyde: Meta suggests that these gestures could be used for teleportation and snap turning. What other innovative applications do you foresee for these microgestures, especially beyond gaming?

Kai Arram: The possibilities are vast. We could see microgestures used extensively in productivity apps. Imagine navigating complex spreadsheets or architectural designs with subtle thumb movements. It’s a less straining and more natural way to interact with virtual environments, which is especially beneficial for accessibility.

Enhanced Audio to Expression: Avatars Get Real

Archyde: The SDK update also features an improved Audio to Expression model. How does this enhance avatar realism, and why should developers be excited?

Kai Arram: The enhanced Audio to Expression significantly boosts the realism of avatars. By generating facial muscle movements from audio input even without dedicated face-tracking hardware, avatars feel more alive and responsive. The improvements in emotional expressivity and mouth movement are noticeable. This means players will forge much stronger connections with the characters they encounter.

Archyde: Does this mean we can say goodbye to the uncanny valley?

Kai Arram: I think we still have a way to go before we entirely conquer the uncanny valley. That being said, improvements like these do make a noticeable difference in the level of immersion a VR user is able to achieve.

The Future of VR Development

Archyde: Meta is exploring these microgestures for future AR glasses. What does this suggest about the Quest headsets’ role in developing future technologies?

Kai Arram: It positions the Quest as an essential development platform. Developers can experiment with and refine microgestures in VR now, preparing them for widespread adoption in AR. This allows us to prototype and iterate in a more contained and established ecosystem.

Archyde: What’s the single most exciting aspect of this SDK update for you as a developer, and what kind of experiences can we expect to see as a result?

Kai Arram: For me, the ability to prototype new interaction models quickly is the most thrilling part. This update lets us iterate rapidly and use hand tracking in a more meaningful way. I anticipate seeing more immersive and intuitive VR games. Imagine puzzle games, or titles that require tactile and dexterous handling of objects.

Archyde: That’s fantastic! Kai Arram, thank you for sharing your insights with us today! Before we let you go, what piece of advice would you give to any VR developers reading this?

Kai Arram: Don’t be afraid to experiment! These new tools open up a realm of possibilities. Try new things, break the mold, and most importantly, listen to user feedback.

Archyde: Great advice. We’ll leave it there. Thank you for your time!

Archyde: Readers, what are your initial thoughts on these new VR capabilities? What applications of thumb microgestures and enhanced audio to expression are you most excited about? Share your thoughts in the comments below!